
Today data has replaced money as the global currency for trade.

“McKinsey estimates that about 75 percent of the value added by data flows on the Internet accrues to ‘traditional’ industries, especially via increases in global growth, productivity, and employment. Furthermore, the United Nations Conference on Trade and Development (UNCTAD) estimates that about 50 percent of all traded services are enabled by the technology sector, including by cross-border data flows.”

As the global economy has become fully dependent on the transformative power of electronic data exchange, its participants have also grown more protective of data’s inherent value. This rise in data protectionism is now so acute that it threatens to restrict the flow of data across national borders. Data-residency requirements, widely used to shield domestic technology providers from international competition, also tend to introduce delays, costs and limitations to commerce in nearly every business sector. The impact is widespread because data protectionism is also driving:

- Laws and policies that further limit the international exchange of data;

- Regulatory guidelines and restrictions that limit the use and scope of data collection; and

- Data security controls that route and allow access to data based on user role, location and access device.
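The last of these trends, granting access based on user role, location and access device, is essentially attribute-based access control. The Python sketch below is a minimal illustration under stated assumptions, not a reference design: the `AccessRequest` type, the three data labels and the `POLICY` table are all hypothetical, and it covers only the “allow access” half (routing is left out). Any attribute outside a label’s policy, or any unknown label, results in a denial.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request context: the three attributes named in the text."""
    role: str      # e.g. "analyst", "admin"
    location: str  # requester's country code, e.g. "US"
    device: str    # e.g. "managed-laptop", "personal-phone"


# Hypothetical policy per data label; None means the label is unrestricted.
# In practice these rules would come from governance policy, not hard-coded values.
POLICY = {
    "restricted": {
        "roles": {"admin"},
        "locations": {"US"},
        "devices": {"managed-laptop"},
    },
    "internal": {
        "roles": {"admin", "analyst"},
        "locations": {"US", "CA", "GB"},
        "devices": {"managed-laptop", "managed-phone"},
    },
    "public": None,
}


def is_allowed(label: str, req: AccessRequest) -> bool:
    """Allow access only if role, location and device all satisfy the label's policy."""
    if label not in POLICY:
        return False  # deny by default for labels the policy does not know
    rule = POLICY[label]
    if rule is None:
        return True   # unrestricted label
    return (
        req.role in rule["roles"]
        and req.location in rule["locations"]
        and req.device in rule["devices"]
    )


# Usage: an analyst on a managed laptop, but connecting from outside the allowed countries.
analyst_abroad = AccessRequest("analyst", "FR", "managed-laptop")
print(is_allowed("internal", analyst_abroad))  # False: location not permitted
```

Denying unknown labels by default keeps a labeling mistake from silently widening access.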

As a direct consequence, enterprises across the entire business spectrum now face the challenge of how to classify and label this vital component of commerce.

|

|

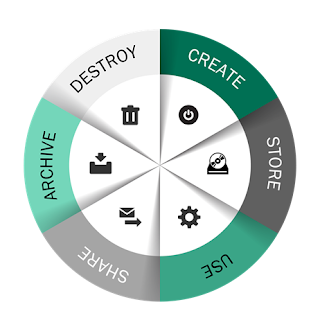

Figure 1– The data lifecycle

|

The challenges posed here are immense. Not only is an extremely large amount of data being created every day, but businesses must also manage and leverage their huge store of older data. This stored wealth is not static, because every bit of data has a lifecycle through which it must be monitored, modified, shared, stored and eventually destroyed. The growing adoption of cloud computing technologies adds yet another layer of complexity to this mosaic. Another reality, long underappreciated but now being raised in boardrooms everywhere, is how these changes affect business risk and internal information technology governance. Broadly lumped under cybersecurity, this domain has little legal precedent, yet it demands headline-driven, rapid-fire business decisions almost daily.
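One way to make the data lifecycle concrete is to treat it as a small state machine. In this hypothetical Python sketch, the stages come from the paragraph above, but the allowed transitions, the `DataItem` class and the audit-log format are assumptions made for illustration. “Monitoring” is reduced to recording every transition, and destruction is treated as final.

```python
from enum import Enum


class Stage(Enum):
    CREATED = "created"
    STORED = "stored"
    MODIFIED = "modified"
    SHARED = "shared"
    DESTROYED = "destroyed"


# Assumed transitions between stages; DESTROYED has no outgoing transitions.
ALLOWED = {
    Stage.CREATED: {Stage.STORED, Stage.MODIFIED, Stage.SHARED, Stage.DESTROYED},
    Stage.STORED: {Stage.MODIFIED, Stage.SHARED, Stage.DESTROYED},
    Stage.MODIFIED: {Stage.STORED, Stage.SHARED, Stage.DESTROYED},
    Stage.SHARED: {Stage.STORED, Stage.MODIFIED, Stage.DESTROYED},
    Stage.DESTROYED: set(),
}


class DataItem:
    """One piece of data tracked through its lifecycle (hypothetical model)."""

    def __init__(self, item_id: str):
        self.item_id = item_id
        self.stage = Stage.CREATED
        # Monitoring here is simply an audit trail of (from_stage, to_stage) pairs.
        self.audit_log = [(None, Stage.CREATED)]

    def move_to(self, new_stage: Stage) -> None:
        """Advance to new_stage, rejecting transitions the model does not allow."""
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(
                f"{self.item_id}: cannot go from {self.stage.value} to {new_stage.value}"
            )
        self.audit_log.append((self.stage, new_stage))
        self.stage = new_stage


# Usage with a hypothetical record identifier.
item = DataItem("customer-record-42")
item.move_to(Stage.STORED)
item.move_to(Stage.SHARED)
item.move_to(Stage.DESTROYED)
print(len(item.audit_log))  # 4
```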

To deal with this new reality, enterprises must standardize how they manage data and reduce the complexity involved. Success in this task requires a renewed focus on data classification, data labeling and data loss prevention. Although these data security precautions have historically been glossed over as too expensive or too hard, the penalties and long-term pain associated with a data breach have raised the stakes considerably. According to the Global Commission on Internet Governance, the average financial cost of a single data breach could exceed $12,000,000 [1], which includes:

- Organizational Costs: $6,233,941

- Detection and Escalation Costs: $372,272

- Response Costs: $1,511,804

- Lost Business Costs: $3,827,732

- Victim Notification Costs: $523,965
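Assuming the five components are additive and do not overlap, as the list implies, a quick Python check confirms that they sum to roughly $12.5 million, consistent with the “could exceed $12,000,000” figure. The dictionary keys are simply labels for the listed items.

```python
# Cost components as listed above (US dollars), treated as non-overlapping.
breach_costs = {
    "Organizational": 6_233_941,
    "Detection and escalation": 372_272,
    "Response": 1_511_804,
    "Lost business": 3_827_732,
    "Victim notification": 523_965,
}

total = sum(breach_costs.values())
print(f"Total: ${total:,}")  # Total: $12,469,714

# Share of the total contributed by each component, largest first.
for name, cost in sorted(breach_costs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<26} {cost / total:6.1%}")
```

Organizational and lost-business costs together account for most of the total, which is why the indirect consequences of a breach tend to dominate the direct response bill.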

So is adequate data classification still simply a bridge too far?

While the competencies required to implement an effective data management program are significant, they are well within reach. The relevant skill sets are, in fact, foundational to deploying modern business automation, which in turn represents the only economical path toward streamlining repeatable processes and reducing manual tasks. The minimum steps include:

- Improving enterprise awareness of the importance of data classification

- Abandoning outdated or unrealistic classification schemes in favor of less complex ones

- Clarifying organizational roles and responsibilities while removing those that have been tailored to individuals

- Focusing on identifying and classifying data, not data sets

- Adopting and implementing a dynamic classification model [2]
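The last two steps, classifying individual pieces of data rather than whole data sets and using a dynamic model, can be illustrated with a short Python sketch. This is not the method from the cited report: the three-level Public/Internal/Restricted scheme, the two regex detection rules and the `published` context flag are hypothetical choices made for illustration. The point is that each field gets its own label, and that label is recomputed whenever the item’s content or context changes rather than assigned once.

```python
import re
from dataclasses import dataclass, field
from enum import IntEnum


class Label(IntEnum):
    """Hypothetical simplified scheme; a higher value means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2


# Illustrative detection rules only; production detectors need far more care.
RESTRICTED_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),         # US SSN-like number
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),   # email address
]


@dataclass
class ClassifiedItem:
    value: str
    published: bool = False               # hypothetical context flag
    label: Label = field(init=False)      # always derived, never passed in

    def __post_init__(self):
        self.reclassify()

    def reclassify(self) -> None:
        """Recompute the label from the item's current content and context."""
        if any(p.search(self.value) for p in RESTRICTED_PATTERNS):
            self.label = Label.RESTRICTED
        elif self.published:
            self.label = Label.PUBLIC
        else:
            self.label = Label.INTERNAL

    def update(self, value=None, published=None) -> None:
        """Change content and/or context, then reclassify (the dynamic part)."""
        if value is not None:
            self.value = value
        if published is not None:
            self.published = published
        self.reclassify()


# Usage: classify each field of a record individually, not the record as a whole.
record = {"name": "Jane Doe", "email": "jane@example.com", "note": "Q3 roadmap draft"}
items = {k: ClassifiedItem(v) for k, v in record.items()}
print({k: i.label.name for k, i in items.items()})
# {'name': 'INTERNAL', 'email': 'RESTRICTED', 'note': 'INTERNAL'}

items["note"].update(published=True)
print(items["note"].label.name)  # PUBLIC
```

In this sketch a match on a restricted pattern always wins, so even a published item that contains an email address stays Restricted; a real scheme would need to decide that precedence deliberately.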

The modern enterprise must either build these competencies in-house or work with a trusted third party to move through these steps. Since the importance of data will only increase, implementing a modern data classification and modeling program is destined to become even more business-critical.

(This post was brought to you by IBM Global Technology Services. For more content like this, visit Point B and Beyond.)

[2] Recommended steps adapted from “Rethinking Data Discovery And Data Classification,” by Heidi Shey and John Kindervag, October 1, 2014, available from IBM at https://www-01.ibm.com/common/ssi/cgi-bin/ssialias?htmlfid=WVL12363USEN

(Thank you. If you enjoyed this article, get free updates by email or RSS – © Copyright Kevin L. Jackson 2015)
